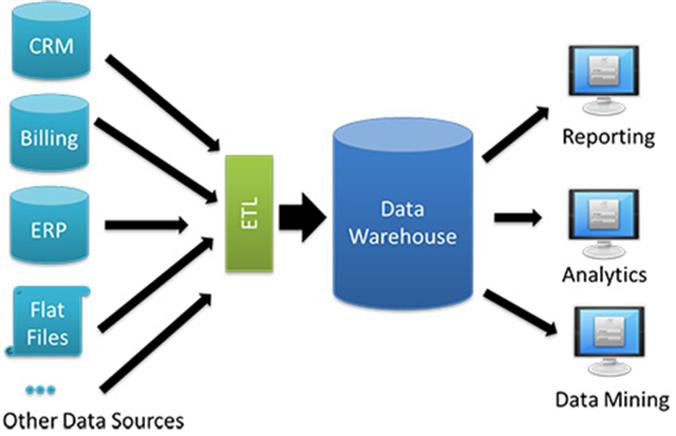

Once you’ve decided that your organization is ready for BI and that you’ve identified your KPIs according to the requirements set out in an earlier post, it’s time to get down to the implementation business. The following are best practices in the application of the BI infrastructure. Refer to Figure below if you need to refresh your memory for the BI process.

Take an Iterative Implementation Approach

The iterative approach is ideally suited to implementing the BI infrastructure. BI projects that take six months or more to develop as a whole are highly likely to fail. Incremental implementation of the operating portions of the BI infrastructure over shorter periods of time helps. This ensures that the project remains on schedule while creating momentum and offering a faster return on investment.

Utilize a Data Warehouse/Data Mart

Users should not build their self-service content directly from transactional data stores. Instead, a data warehouse or a collection of data marts could be built to store a copy of the transaction data. This means that a business consumer is unable to stop the line-of-business systems by a poorly performing query.

Data Mart: brings together data that is limited to a particular subject area/department.

Transactional data copying may also be restructured from a format optimized for transactional processing to a format optimized for analytical processing. This helps ensure the efficient operation of a self-service analytics environment. In addition, data from several line-of-business systems can be pooled to allow analysis across the entire organization.

Cleanse and Validate During Data Gathering

Data should be cleaned and checked as much as possible as is collected in the data warehouse or data marts. It shall contain the following tasks:

- Validating against lists of known entities such as streets, cities, states, ZIP codes, gender, and so on.

- Make sure there are both sides of the relationship. For example, if sales are credited to a certain salesperson, make sure that the salesperson is present at the salesperson’s table in the warehouse.

- Testing data types and data lengths.

Any data that fails to verify the method should be treated in a graceful manner. It should be logged in an acceptable manner and probably saved to a separate location for manual cleaning and reloading. Notifications should be sent to the right people to let them know that a data validation problem has arisen.

Audits should be run to ensure the data loaded completely and accurately. This may include counting certain entities or totaling certain quantities

The source of each piece of information should be tracked through the extract, transform, and load (ETL)

Create User-Friendly Data Models

The databases are dynamic organisms. Technical aptitude and years of experience are required to navigate and retrieve data in these systems efficiently. Expecting the majority of business users to master the ins and outs of SELECT statements with INNER JOINs and GROUP BYs would lead to disgruntled users and inaccurate results.

A data model with a business user-friendly metadata layer needs to be set up. Full English space names should be used for all items exposed to business users.

In addition, the data model already knows how to make the proper connections inside the data and which connections will lead to ridiculous outcomes.

The data model will contain definitions for the KPI calculations agreed upon. Instead of re-creating these equations over and over in different reports and visualizations, a single description may be used in multiple reports. Finally, complex analysis, such as year-on-year growth or prorated percent of the target, may be predefined for ease of use.

No Comments Yet